Adapting Models to Signal Degradation using Distillation

People

Abstract

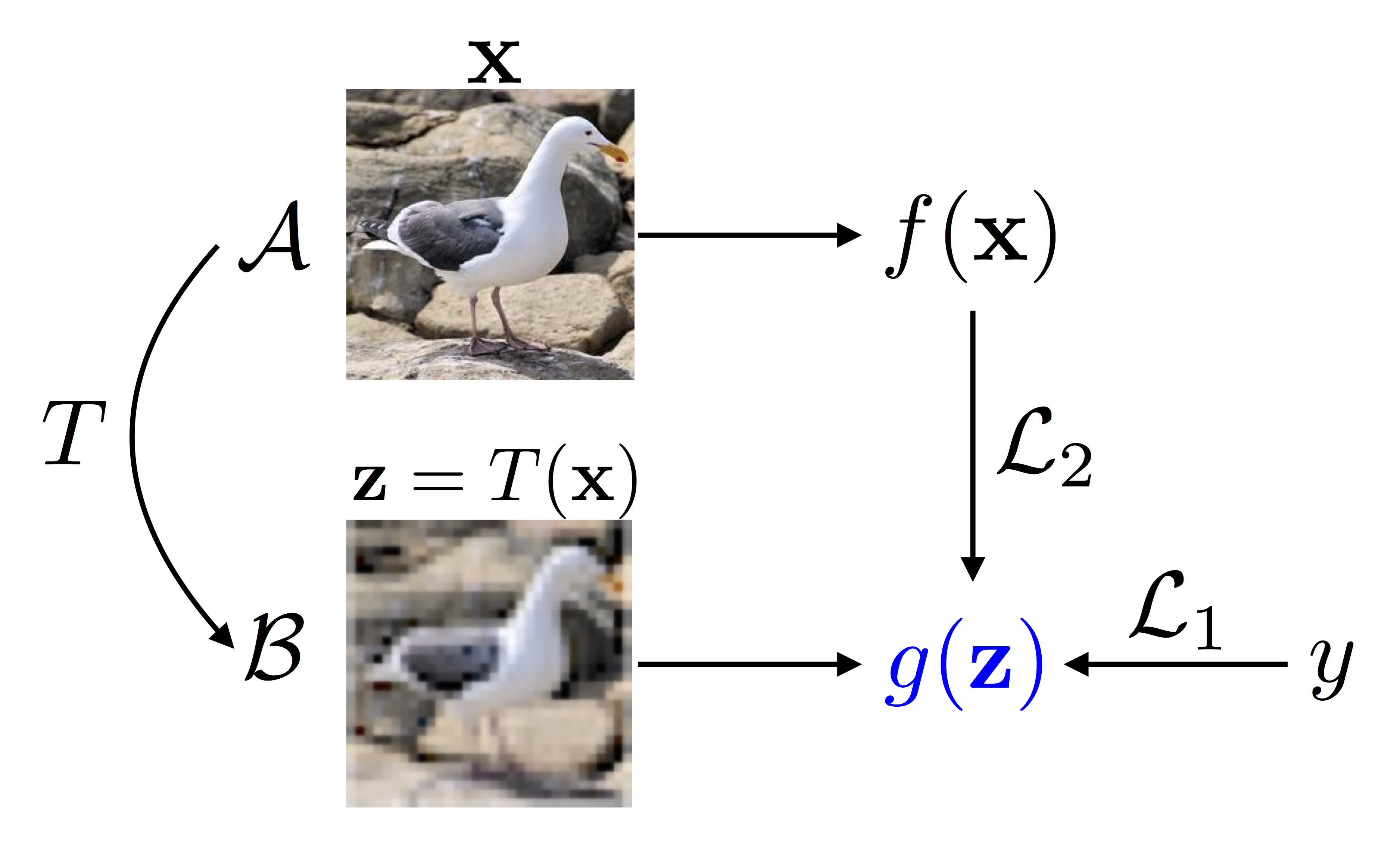

Model compression and knowledge distillation have been successfully applied to cross-architecture and cross-domain transfer learning. However, a key requirement is that training examples are in correspondence across the domains. We show that in many scenarios of practical importance such aligned data can be synthetically generated using computer graphics pipelines, allowing domain adaptation through distillation. We apply this technique to learn models for recognizing low-resolution images using labeled high-resolution images, non-localized objects using labeled localized objects, line drawings using labeled color images, etc. Experiments on various fine-grained recognition datasets demonstrate that the technique improves recognition performance on the low-quality data and beats strong baselines for domain adaptation. Finally, we present insights into the workings of the technique through visualizations and by relating it to existing literature.
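The idea in the abstract can be illustrated with a small NumPy sketch: a high-quality image is degraded synthetically to obtain an aligned low-quality copy, and a student that sees the degraded copy is trained to match a teacher that sees the original. This is only a sketch under stated assumptions, not the authors' released code. The helper names `degrade` and `distillation_loss`, the temperature `T`, the weight `lam`, and the temperature-softened cross-entropy form of the loss are all illustrative choices; the loss used in the paper may differ.

```python
import numpy as np

def degrade(image, factor=4):
    """Hypothetical degradation: average-pool an H x W x C image by `factor`,
    then upsample back by pixel repetition to mimic a low-resolution input."""
    h, w = image.shape[:2]
    h2, w2 = h - h % factor, w - w % factor      # crop so the size divides evenly
    img = image[:h2, :w2]
    pooled = img.reshape(h2 // factor, factor, w2 // factor, factor, -1).mean(axis=(1, 3))
    return np.repeat(np.repeat(pooled, factor, axis=0), factor, axis=1)

def softmax(logits, T=1.0):
    """Numerically stable softmax with temperature T."""
    z = logits / T
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def distillation_loss(student_logits, teacher_logits, labels, T=4.0, lam=0.5):
    """Assumed loss: (1 - lam) * cross-entropy with the labels
    + lam * T^2 * cross-entropy between softened teacher and student outputs.
    student_logits come from the degraded image, teacher_logits from the
    aligned high-quality image."""
    n = len(labels)
    p_student = softmax(student_logits)
    hard = -np.log(p_student[np.arange(n), labels] + 1e-12).mean()
    q_teacher = softmax(teacher_logits, T)
    q_student = softmax(student_logits, T)
    soft = -(q_teacher * np.log(q_student + 1e-12)).sum(axis=-1).mean()
    return (1.0 - lam) * hard + lam * (T ** 2) * soft
```

In use, each labeled high-quality training image `x` would be paired with `degrade(x)`, the teacher's logits computed on `x`, the student's logits computed on `degrade(x)`, and the student updated to reduce `distillation_loss`. Other degradations mentioned in the abstract (removing localization, converting to line drawings) would replace `degrade` with a different synthetic transform.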

Paper

arXiv, Bibtex

Poster

poster

Source Code

Citation

Jong-Chyi Su, Subhransu Maji, "Adapting Models to Signal Degradation using Distillation", British Machine Vision Conference (BMVC), 2017