Running

Once compiled, run the program without any command line arguments (it ignores them). You will see a simple menu system where you can make several selections. First you will be asked what domain you want to use, and you can choose from several canonical domains used in reinforcement learning research: mountain car, puddle world, acrobot, cart-pole, pendulum (swing-up and balance), and bicycle.

Next you will be asked what "order" you want to use. We use multilinear interpolation (the extension of bilinear interpolation to higher dimensions) over a uniform grid of points to estimate the q-function. The "order" that this refers to is the number of points used along each dimension. Notice that the total number of points (weights used by the function approximator) is pow(order, dim), where dim is the dimension of the (continuous) state-space. If you select an order that is too low, then the approximation of the q-function will be poor and the policy may not perform well. If you select an order that is too high, then it may take a large number of iterations of value iteration for the policy to become good, and each iteration may take a long time.

Next you will be asked whether or not you would like to use "value averaging". In short, for anything but bicycle, do not use value averaging. For bicycle, use value averaging. In more detail: if the state-transitions of the domain are stochastic (not deterministic), then value iteration calls for averaging over all possible outcomes of taking an action in a state. If there is a large number of possible outcomes (e.g., in bicycle there are infinite) then we can only sample some possible outcomes. However, to get a good estimate of the true average, we may have to use a massive number of samples, which is computationally expensive. To get around this, you can use "value averaging", wherein only a single sample is used, but the q-values aren't changed to the new values given by the (consistent) Bellman operator - instead they are moved a small amount toward the new amount (we take a weighted average of the old and new values).

You will then be asked which maxQ variant you want to use. Here "Ordinary Bellman" corresponds to performing value iteration using the Bellman operator, as has been done for decades. The option "Consistent Bellman" corresponds to performing value iteration using the newly proposed consistent Bellman operator. We refer to these as "maxQ variants" because the consistent Bellman operator can be viewed as replacing the term in the Bellman operator that takes the maximum over actions with a different term.

You will then be asked how many iterations of value iteration to run, as well as how many threads to use when running value iteration and when evaluating policies. By threading the code, we made it run slower (even if you select only one thread!) for most cases. However, most cases are fast anyway. The threading can help a lot when running the bicycle domain, where we suggest using the number of cores (or hyperthreads) available as the number of threads.

After you select the number of threads, you will be asked how often the policy should be evaluated. If you select 1, then after every iteration of value iteration, the greedy policy with respect to the q-function will be evaluated and the resulting performance stored. However, this can be costly - evaluating the performance of a policy can take a long time on some domains. So, you may wish to only evaluate the policy after every few iterations (e.g., the paper evaluated policies for bicycle after every 10 iterations).

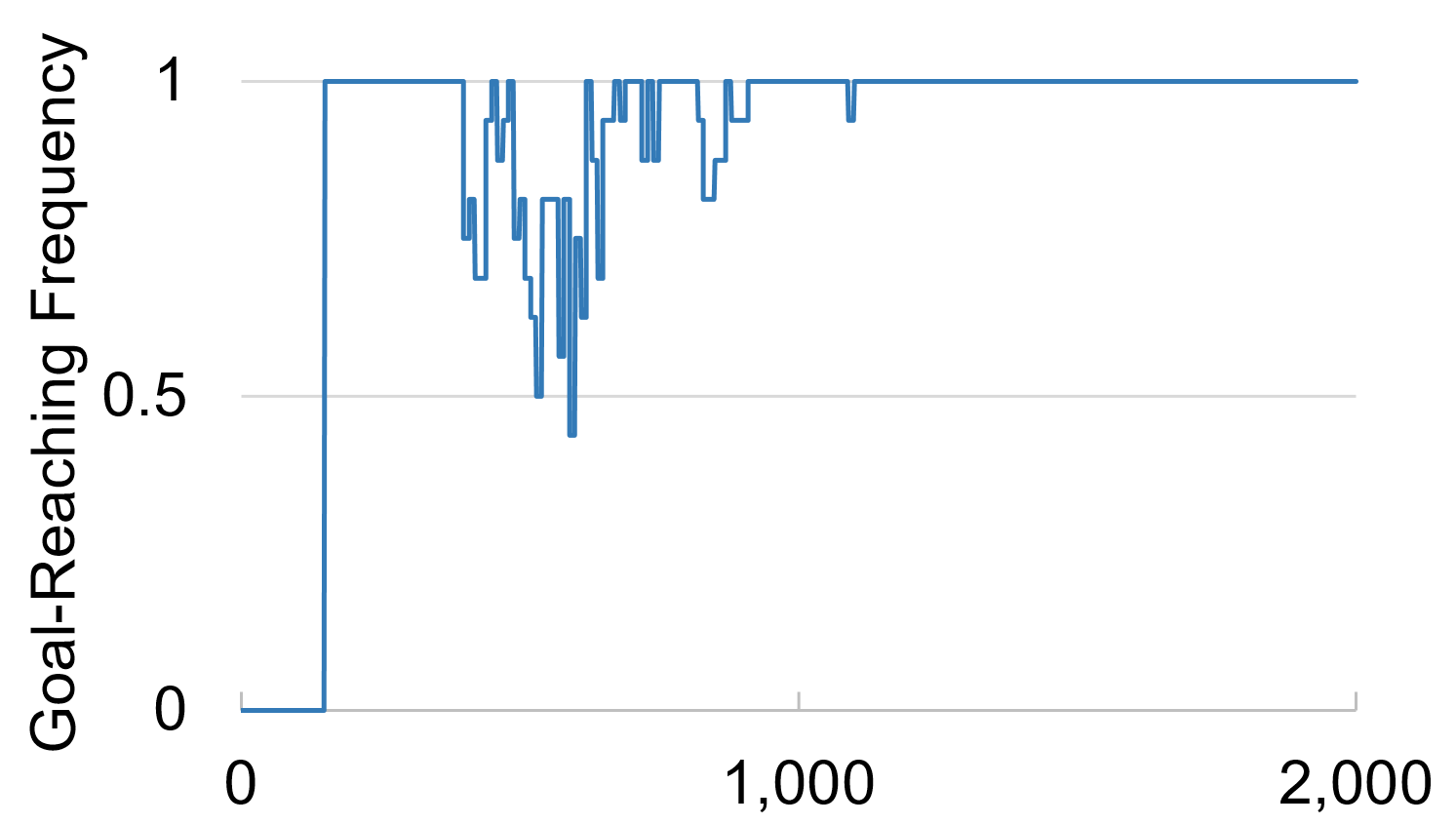

Finally, you will be asked whether you want to print the results to a file. If you select "yes", then you will be asked for a file name to print the results to. The file will contain a sequence of iteration numbers and the corresponding performance of the policy at each iteration. Importantly, the performances are not necessarily the (discounted) returns - they are some meaningful statistic. For example, for bicycle the performance is 0 if the bicycle does not reach the goal and 1 if the bicycle does reach the goal. This is useful for domains where the reward is shaped, and so looking directly at returns isn't particularly informative. Another example is the pendulum domain: the performance is the amount of time during the 20-second episode that the pendulum is near-vertical. A brief description of what statistic is being reported as "performance" is printed to the console while the program runs, and is included at the top of the output file.