Neural Contours: Learning to Draw Lines from 3D Shapes

CVPR 2020

Abstract

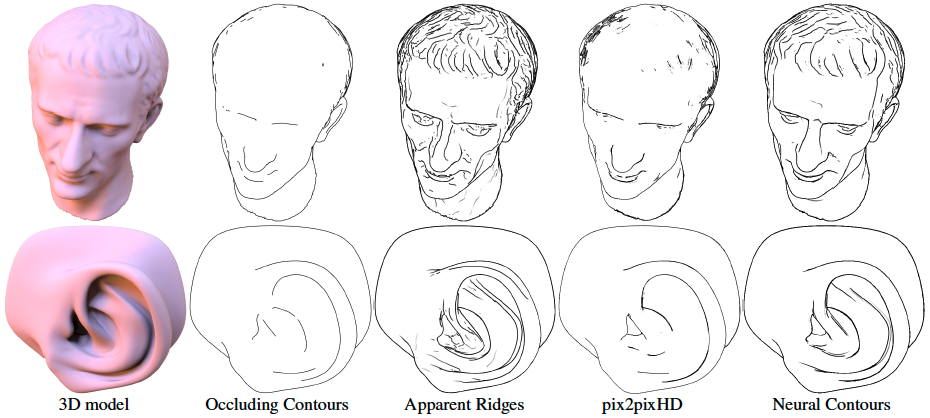

This paper introduces a method for learning to generate line drawings from 3D models. Our architecture incorporates a differentiable module operating on geometric features of the 3D model and an image-based module operating on view-based shape representations. At test time, geometric and view-based reasoning are combined with the help of a neural module to create a line drawing. The model is trained on a large number of crowdsourced comparisons of line drawings. Experiments on standard benchmarks demonstrate that our method significantly improves line drawing quality over the state of the art, producing drawings comparable to those created by experienced human artists.
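The abstract states that the model is trained on crowdsourced comparisons of line drawings. As a generic illustration of learning from pairwise preferences (not the authors' implementation), the sketch below fits a linear Bradley-Terry-style scorer with a logistic pairwise loss. The two-dimensional feature vectors, the linear scorer, and the names `score`, `pairwise_logistic_loss`, and `train` are all hypothetical; the paper's model is a neural architecture operating on geometric and view-based features, not a linear scorer.

```python
import math
import random

# Hypothetical sketch: learn a score s(x) = w . x so that, for each crowdsourced
# comparison, the preferred drawing scores higher than the rejected one.
# This is a generic pairwise-ranking example, NOT the Neural Contours code.

def sigmoid(x):
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def score(weights, features):
    # Linear score for one drawing; higher means "more preferred".
    return sum(w * f for w, f in zip(weights, features))

def pairwise_logistic_loss(weights, preferred, rejected):
    # Bradley-Terry: P(preferred beats rejected) = sigmoid(s_p - s_r).
    # Loss = -log sigmoid(m) = log(1 + exp(-m)), computed stably.
    m = score(weights, preferred) - score(weights, rejected)
    return max(0.0, -m) + math.log1p(math.exp(-abs(m)))

def train(comparisons, dim, lr=0.1, epochs=200, seed=0):
    # Plain SGD on the pairwise logistic loss.
    rng = random.Random(seed)
    weights = [0.0] * dim
    data = list(comparisons)
    for _ in range(epochs):
        rng.shuffle(data)
        for preferred, rejected in data:
            m = score(weights, preferred) - score(weights, rejected)
            # d(loss)/d(margin) = -sigmoid(-m); d(margin)/dw = preferred - rejected.
            g = -sigmoid(-m)
            for i in range(dim):
                weights[i] -= lr * g * (preferred[i] - rejected[i])
    return weights

# Toy data: each pair is (features of preferred drawing, features of rejected drawing).
# The features are made up purely for illustration.
comparisons = [
    ([1.0, 0.3], [0.1, 0.2]),
    ([0.8, 0.9], [0.2, 0.5]),
    ([0.9, 0.4], [0.2, 0.2]),
]
weights = train(comparisons, dim=2)
print("learned weights:", weights)
```

Once trained, such a scorer can rank candidate drawings and keep the highest-scoring one; the logistic pairwise loss is a common choice because it only needs relative judgments ("A looks better than B"), which are easier to crowdsource reliably than absolute quality ratings.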

Paper

NeuralContours.pdf

Video

Source Code & Data

GitHub code: https://github.com/DifanLiu/NeuralContours

Dataset: https://www.dropbox.com/s/ufiu97sn4j4h9z0/dataset.zip?dl=0

Acknowledgements

This research is partially funded by NSF (CHS-1617333) and Adobe. Our experiments were performed in the UMass GPU cluster obtained under the Collaborative Fund managed by the Massachusetts Technology Collaborative. We thank Keenan Crane for the Ogre, Snakeboard, and Nefertiti 3D models.