Optical Flow Estimation with Channel Constancy

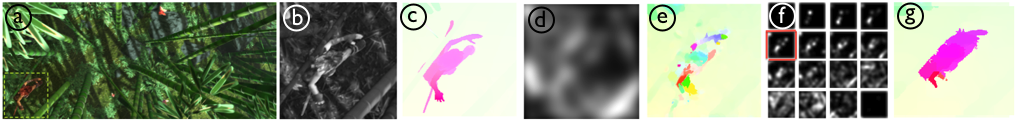

(a) Frame from the MPI-Sintel dataset. (b) Zoomed detail containing small, fast motion. (c) Ground truth optical flow. (d) Blurred image showing loss of detail. (e) Estimated flow using Classic+NL. (f) Blurred channel representation. (g) Estimated flow using our proposed method (Channel Flow).

Abstract:

Large motions remain a challenge for current optical flow algorithms. Traditionally, large motions are addressed using multi-resolution representations like Gaussian pyramids. To deal with large displacements, many pyramid levels are needed and, if an object is small, it may be invisible at the highest levels. To address this, we decompose images using a channel representation (CR) and replace the standard brightness constancy assumption with a descriptor constancy assumption. CRs can be seen as an over-segmentation of the scene into layers based on some image feature. If the appearance of a foreground object differs from the background, then its descriptor will be different and they will be represented in different layers. We create a pyramid by smoothing these layers, without mixing foreground and background or losing small objects. Our method estimates more accurate flow than the baseline on the MPI-Sintel benchmark, especially for fast motions and near motion boundaries.
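As a rough illustration of the idea in the abstract, the sketch below splits a grayscale image into intensity channels and then smooths each channel separately. It is not the authors' implementation: the triangular kernel, the number of channels, the box filter, and the names `channel_encode` and `box_blur` are all assumptions chosen for brevity, and the page does not state which kernel or feature the paper uses.

```python
import numpy as np


def channel_encode(image, n_channels=4):
    """Soft-assign each intensity in [0, 1] to overlapping channels.

    Assumption: a triangular (hat) kernel centered on evenly spaced
    intensity values. The kernel is an illustrative choice only.
    """
    centers = np.linspace(0.0, 1.0, n_channels)
    spacing = 1.0 / (n_channels - 1)
    # Distance from each pixel to each channel center, in units of spacing.
    d = np.abs(image[..., None] - centers) / spacing
    # Hat kernel: weight 1 at the center, falling to 0 one spacing away.
    return np.clip(1.0 - d, 0.0, None)


def box_blur(layers, radius=2):
    """Blur every channel spatially with a (2r+1) x (2r+1) box filter."""
    pad = ((radius, radius), (radius, radius), (0, 0))
    padded = np.pad(layers, pad, mode="edge")
    h, w, _ = layers.shape
    size = 2 * radius + 1
    out = np.zeros_like(layers)
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + h, dx:dx + w, :]
    return out / size**2


# Toy scene: dark background with a single bright pixel (a "small object").
image = np.zeros((9, 9))
image[4, 4] = 1.0

channels = channel_encode(image)   # shape (9, 9, 4)
blurred = box_blur(channels)       # each layer smoothed on its own

# Blurring the raw image instead averages the object into the background.
blurred_image = box_blur(image[..., None])[..., 0]
```

In this toy scene the bright pixel lands entirely in the last channel and the background entirely in the first, so smoothing the layers spreads the object spatially without averaging it with background intensities. In `blurred_image`, by contrast, the object is diluted by the 24 dark pixels inside each filter window.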

Paper:

Laura Sevilla-Lara, Deqing Sun, Erik G. Learned-Miller and Michael J. Black.

Optical Flow Estimation with Channel Constancy

European Conference on Computer Vision (ECCV), 2014.

[pdf]